Studying Item Response Theory

Hi everybody, I am happy to be back on my blog to talk about something new and interesting (at least to me it is!). Recently I have been so busy with routine work that I had little time to focus on my personal projects, which is something I definitely need to change in the upcoming weeks. Overcome by remorse, I decided to force myself to start looking at some new and interesting research lines which could be linked to my current expertise.

Since last year I have wanted to dedicate more time to studying the statistical framework of Item Response Theory (IRT), which I find really fascinating. So, last week I started giving myself a bit of time to review some of the major handbooks and manuals on the topic, which I only knew partially. Specifically, given my involvement in trial-based analyses, I was quite familiar with concepts such as validated multi-item questionnaires, latent constructs, Likert scales and so on, but until now I had never delved into the whole IRT topic due to time constraints and other priorities. I am still reviewing lots of literature, as I feel there are concepts which I do not grasp very well yet, but recently I have been reading the Handbook of modern IRT by Wim J. van der Linden, a general manual covering the basics of the theory and the most popular modelling approaches.

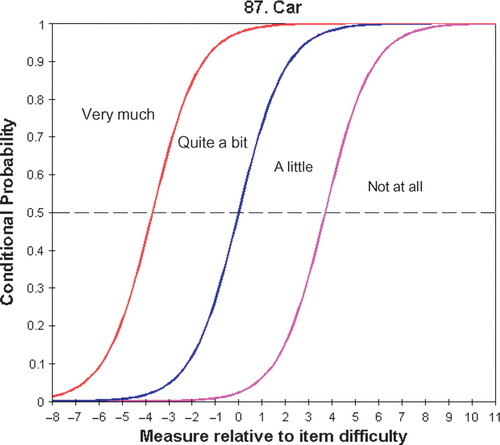

Very briefly, what I have learned so far is that IRT was born as a way to theoretically and, most importantly, mathematically link the probability that a respondent gives a specific answer to an item within a questionnaire to an underlying latent construct or ability. There is a variety of model classes, each associated with a specific type of item (e.g. binary, ordinal, categorical). Within these classes there is a range of different model specifications, each resting on different assumptions. The aim is to estimate the latent abilities of interest while controlling for the influence of nuisance parameters (e.g. item difficulty), through a rigorous specification of the mathematical functions linking these two types of parameters.

I still have a lot to cover, but the premises so far are very exciting to me, as this can genuinely be seen as a latent variable problem: an unobserved parameter is estimated through an inherently inductive approach in which observed data (i.e. individual item answers) are used to learn something about it. Does this sound familiar to you? Well, to me, damn yes: this is the basis of Bayesian analysis! In fact, Bayesian analysis of IRT data is very popular, as the inherent hierarchical structure of these data perfectly matches the flexible Bayesian modelling framework, where parameters are random variables to which distributions are assigned to represent their level of uncertainty. This is done both before observing the data, which can be extremely helpful for incorporating external assumptions about these parameters in the model, and after observing the data, when the prior is updated in a rational and coherent way (i.e. through Bayes' theorem). This natural advantage of the Bayesian approach, together with the notorious difficulties that standard frequentist approaches encounter when fitting very complex hierarchical models, makes the adoption of the Bayesian philosophy not only intuitive but also very practical.
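To make the link between item answers, latent ability and Bayes' theorem a bit more tangible, here is a minimal sketch for binary items. It assumes a two-parameter logistic (2PL) model, item parameters that are already known, a standard normal prior on the ability, and a simple grid approximation to the posterior. The function names, the item values and the response pattern are all made up for illustration; this is not taken from the handbook, and a real analysis would estimate the item parameters jointly (e.g. via MCMC).

```python
import math

def irf_2pl(theta, a, b):
    """Probability of a correct/positive answer under an assumed 2PL model.

    theta: latent ability, a: item discrimination, b: item difficulty.
    Setting a = 1 for every item gives the Rasch model.
    """
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))

def ability_posterior(responses, a, b, grid):
    """Grid approximation to p(theta | responses) for one respondent.

    Assumes known item parameters and a N(0, 1) prior on theta.
    Returns posterior weights over the grid points (they sum to 1).
    """
    log_post = []
    for theta in grid:
        lp = -0.5 * theta ** 2  # log of the N(0, 1) prior, up to a constant
        for y, a_j, b_j in zip(responses, a, b):
            p = irf_2pl(theta, a_j, b_j)
            lp += math.log(p) if y == 1 else math.log(1.0 - p)
        log_post.append(lp)
    top = max(log_post)  # subtract the max for numerical stability
    weights = [math.exp(lp - top) for lp in log_post]
    total = sum(weights)
    return [w / total for w in weights]

def posterior_mean(grid, post):
    return sum(t * w for t, w in zip(grid, post))

# Hypothetical four-item questionnaire and one respondent's answers.
a = [1.0, 1.5, 0.8, 1.2]    # discriminations (illustrative values)
b = [-1.0, 0.0, 0.5, 1.5]   # difficulties (illustrative values)
responses = [1, 1, 0, 0]
grid = [-4.0 + 0.02 * i for i in range(401)]

post = ability_posterior(responses, a, b, grid)
print("posterior mean ability:", round(posterior_mean(grid, post), 3))
```

The prior enters as the first term of the log posterior and the item answers update it through the likelihood, which is exactly the "prior, then data, then posterior" logic described above.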

I think that there is so much potential to work on this topic and to see if there is room to expand the current methodology in some new directions. Of course, I am aware that many different Bayesian models have been applied to these types of data, but I feel that there could be space for opening up some new research opportunities that have only rarely been touched before. For example, when collecting questionnaire data, especially self-reported data, missing values are common and almost inevitable. This further complicates the analysis, since assumptions about these unobserved answers must be made when fitting any type of model. In most cases I see that standard simplifying assumptions, such as missing completely at random (MCAR), are made to avoid a large increase in model complexity. Although certainly plausible in some cases, such assumptions are not very likely to hold in realistic scenarios (e.g. it is unlikely that people who did not answer some items are no different, systematically, from those who completed the questionnaire). So, what if I would like to explicitly state the missing data assumptions within the IRT framework? For example, by including a selection or pattern mixture model to assess the impact of informative missingness assumptions on the results? This for sure becomes a nightmare from a methodological perspective, but I really think it is our duty to push this field forward and improve the methods.
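As a rough illustration of why the missingness assumption matters, the sketch below simulates binary answers from a Rasch model and then deletes some of them under a selection-type mechanism, where the probability that an answer is missing may depend on the (unobserved) answer itself. Everything here is an assumption chosen for illustration: the function names, the logistic form of the missingness model and the values of the hypothetical parameters `gamma0` and `gamma1`. With `gamma1 = 0` the mechanism reduces to MCAR; with `gamma1 < 0` "wrong" answers are more likely to go missing, which is one possible form of informative missingness.

```python
import math
import random

def logistic(x):
    return 1.0 / (1.0 + math.exp(-x))

def simulate_rasch(n_resp=5000, difficulties=(-1.0, 0.0, 1.0), seed=1):
    """Simulate complete binary answers with abilities drawn from N(0, 1)."""
    rng = random.Random(seed)
    data = []
    for _ in range(n_resp):
        theta = rng.gauss(0.0, 1.0)
        data.append([1 if rng.random() < logistic(theta - b) else 0
                     for b in difficulties])
    return data

def delete_answers(complete, gamma0, gamma1, seed=2):
    """Selection-type mechanism: logit P(missing | y) = gamma0 + gamma1 * y.

    gamma1 = 0 gives MCAR; gamma1 != 0 makes missingness depend on the
    answer that would have been given (informative missingness).
    """
    rng = random.Random(seed)
    return [[None if rng.random() < logistic(gamma0 + gamma1 * y) else y
             for y in row]
            for row in complete]

def prop_positive(data):
    """Share of positive answers among the observed (non-missing) ones."""
    observed = [y for row in data for y in row if y is not None]
    return sum(observed) / len(observed)

complete = simulate_rasch()
mcar = delete_answers(complete, gamma0=-1.0, gamma1=0.0)
mnar = delete_answers(complete, gamma0=-0.5, gamma1=-1.5)

print("complete data:", round(prop_positive(complete), 3))
print("MCAR observed:", round(prop_positive(mcar), 3))
print("MNAR observed:", round(prop_positive(mnar), 3))
```

Under the MCAR setting the observed share of positive answers should stay close to the complete-data value, while under the informative setting it drifts upwards, so an analysis that ignores the mechanism would overstate abilities. A full selection or pattern mixture IRT model would instead specify the missingness model jointly with the response model and examine how the results change as the assumptions are varied.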

Possible new research work? Grant proposal? Could be. I just need to find the time to look at this calmly and critically. Is there anyone out there who might be interested in joint work on this? I am always available!